Roles and responsibilities in the data life cycle

Based on: Papadiamantis et al., 2020

© 2020 by the authors. Licensee MDPI

| Roles | Set objectives | Design Approach | Collect | Processing | Modelling / Analysis | Validate | Store | Share | Quality Control | Annotation | Determine Relevance | Apply | Confirm Effectiveness | Generalise | Communication / Education |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Creators | X | X | X | X | X | X | X | X | X | X |  |  |  |  |  |
| Analysts | X | X | X | X | X | X | X | X | X | X |  |  |  |  |  |
| Curators | X | X | X | X | X | X | X |  |  |  |  |  |  |  |  |
| Managers | X | X | X | X | X |  |  |  |  |  |  |  |  |  |  |
| Customers | X | X | X | X | X | X | X |  |  |  |  |  |  |  |  |
| Shepherds | X | X | X | X | X | X | X | X | X | X | X | X | X | X | X |

In the original table, the columns are colour-coded according to the stages of the data management life cycle.

Data customer:

Requestors, accessors, users, and re-users of the required or produced data (evaluation of the scientific and technical FAIRification steps by testing the data for the final goal of usability and reusability in real applications).

Data creator:

The experimentalists (wet-lab and in silico) who plan and generate the data (planning, acquisition, and manipulation stages of the data life cycle; scientific FAIRification steps).

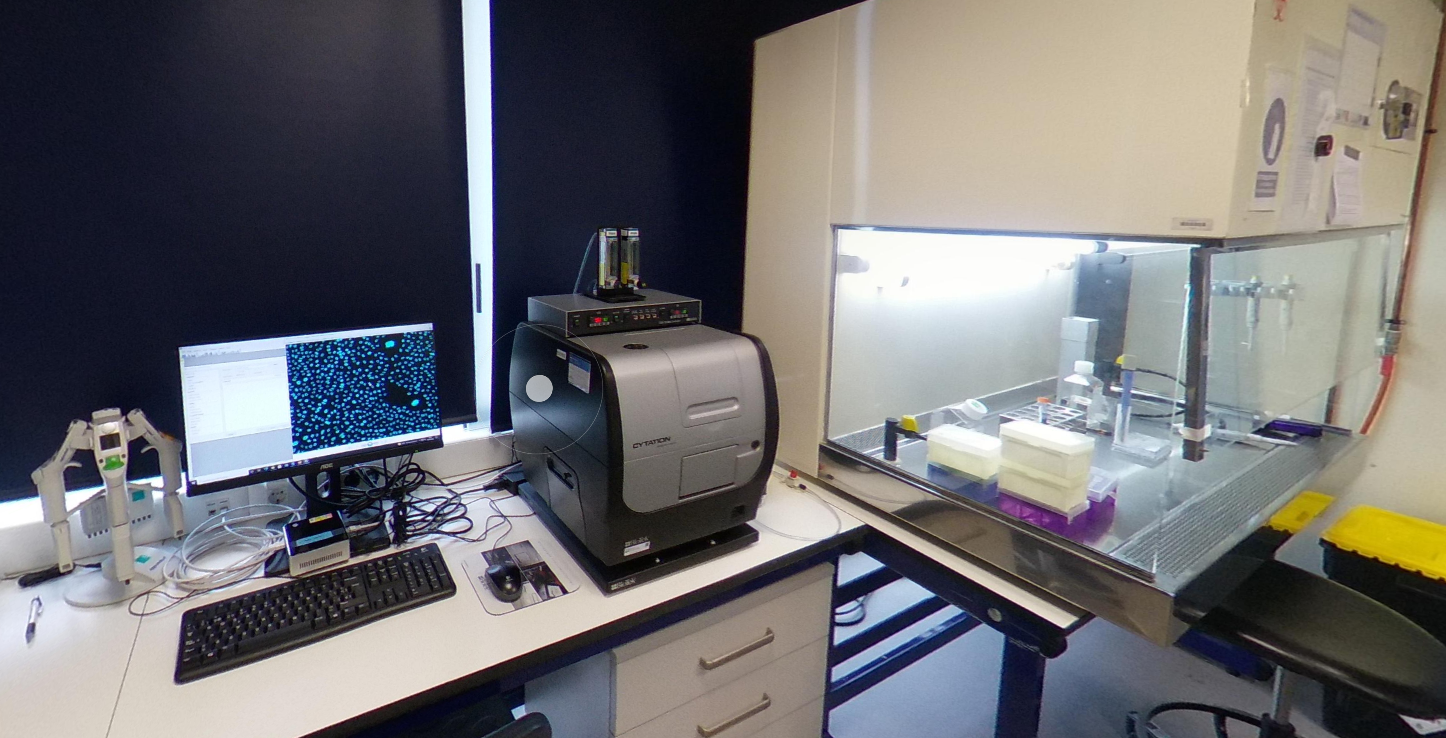

LEITAT lab tour: have a look at one place where data is produced.

Data analyst:

Data handling, manipulation, and analysis, including modelling (processing and analysis stages; scientific FAIRification steps).

Data curator:

Capture of data and metadata, and control of their quality and completeness (data manipulation and storage stages).

Data manager:

Digitisation of data in a structured and harmonised format; implementation of metadata and its linking to the data (storage stage; technical FAIRification steps).

Data steward:

An oversight or data governance role within an organisation or project, responsible for ensuring the quality and fitness for purpose of the data assets, including the metadata for those data assets (adapted from Wikipedia).

Data shepherd:

A new role, strongly encouraged here, that operates throughout the data life cycle. For the data life cycle ecosystem to be functional and successful, its different parts need to be moderated. Moderation makes it possible to "translate" and communicate between the different parties and to guarantee the smooth transfer of data from one role to another.

Furthermore, and potentially most importantly, moderation makes it possible to organise feedback loops: evaluations of the usefulness and completeness of the (meta)data coverage can be passed on, and issues and errors can be reported back to upstream roles, against the normal direction of the data flow, for immediate consideration and fixing and, in more severe cases, for rethinking of the metadata concept. This mediation should be done by a trained data shepherd who combines knowledge of and insight into all other roles and their requirements.

The data shepherd can be described as an enhanced version of a data steward, who not only oversees the data management, handling, and quality control processes but also communicates in clear and simple language with all parties and resolves any misunderstandings. Data shepherds need to combine an experimental, computational, and technical background and/or experience, and must be proficient enough to understand the context in which each party expresses itself. They will need to lead the data quality control and FAIRness evaluation as well as the continuous optimisation of data workflows, including technical developments to facilitate data curation, annotation, and cleansing.

Role-specific training resources

ELIXIR RDMkit resources: Although ELIXIR uses a somewhat different system of roles (Data Steward: infrastructure, Data Steward: policy, Data Steward: research, and Researcher), its resources are especially useful for data stewards and shepherds.

FAIR Connect: This (upcoming) platform is designed for data stewards and provides details of, and recommendations for, FAIR Enabling/Supporting Resources.

References

- Papadiamantis et al., 2020: Papadiamantis, A. G.; Klaessig, F. C.; Exner, T. E.; Hofer, S.; Hofstaetter, N.; Himly, M.; Williams, M. A.; Doganis, P.; Hoover, M. D.; Afantitis, A.; Melagraki, G.; Nolan, T. S.; Rumble, J.; Maier, D.; Lynch, I. Metadata Stewardship in Nanosafety Research: Community-Driven Organisation of Metadata Schemas to Support FAIR Nanoscience Data. Nanomaterials 2020, 10 (10), 2033. https://doi.org/10.3390/nano10102033.